Learning to gather information via imitation

Abstract

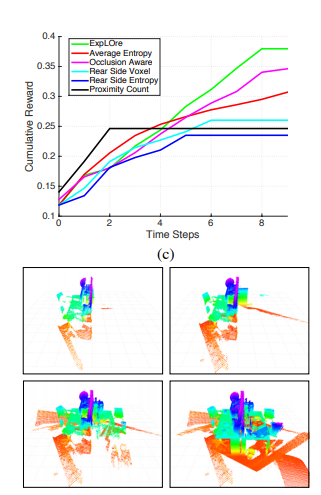

The budgeted information gathering problem, in which a robot with a fixed fuel budget must maximize the amount of information it gathers from the world, arises in a wide range of autonomous exploration and inspection applications with mobile robots. Although there is extensive prior work on effective approximations of the problem, these methods do not address the fact that their performance depends heavily on the distribution of objects in the world. In this paper, we address this issue by proposing a novel data-driven imitation learning framework. We present an efficient algorithm, EXPLORE, that trains a policy on the target distribution to imitate a clairvoyant oracle: an oracle that has full information about the world and computes non-myopic solutions that maximize the information gathered. We validate the approach on a number of 2D and 3D exploration problems, with results that demonstrate the ability of EXPLORE to adapt to different object distributions. Additionally, our analysis provides theoretical insight into the behavior of EXPLORE. Our approach paves the way for efficiently applying data-driven methods to the domain of information gathering.